What is Artificial Intelligence (AI) and its relation to Machine Learning (ML)

There is currently some disagreement on the definition of AI, depending on what is meant by ‘intelligence’. The definitions fall mainly into two groups:

Thinking and Acting Humanly: The Cognitive Definition (Strong AI)

Spearman (1904) defined general intelligence as the capacity to learn and use common sense. At the time, many psychologists measured intelligence by focusing on specific skills independently, such as the ability to solve math problems and the ability to navigate social situations. Spearman argued that to truly capture a person’s intelligence, researchers must assess these abilities in combination. The Turing Test, proposed by Alan Turing (1950), was designed to provide a satisfactory operational definition of intelligence. Turing defined intelligent behaviour as the ability to achieve human-level performance in all cognitive tasks, sufficient to fool an interrogator. An intelligent machine would need to possess the following capabilities (Russell & Norvig, 2003):

- natural language processing to enable it to communicate successfully;

- knowledge representation to store information provided before or during the interrogation;

- automated reasoning and causal inference to use the stored information to answer questions and to draw new conclusions;

- the ability to adapt to new circumstances and to detect and extrapolate patterns, including generalisation.

Adapting to a changing environment, including generalisation

In order to adapt to a new environment, an agent needs to know how to deploy the skills and problem-solving capabilities it acquired in another environment in the new one. This process is supported by the ability to abstract and generalise the specific skills and the objects they act on, in order to subsequently specialise them again in the new scenario. The adaptation is guided by the ability to recognise the similarities and differences between the two environments, and to use this knowledge to decide what information to take from the abstraction step (Booch et al, 2020). Abstraction and generalisation also apply to the objects an agent was trained on. Humans will nearly always identify a bike or a fire engine in different configurations. So-called AI systems, by contrast, can be duped or confounded by situations and object configurations they haven’t seen before: a self-driving car gets flummoxed by a scenario or object that a human driver could handle easily (Bergstein, 2020). These shortcomings have something in common: they exist because nearly all of today’s so-called AI solutions do not have a model of an object. They may have an internal model, but it is not a model anyone understands, and the ML algorithm cannot explain why it made a decision based on it. We call such algorithms model-free ML algorithms. ML algorithms also do not understand the causal relationships between objects, which are explored next.

Causality and Reasoning

An ML algorithm driving a car detects a ball rolling onto the street, identifies it as no danger and continues driving at full speed. Then a child, whom the sensors had not detected because he was behind other pedestrians, steps onto the road. A human could reason that when a ball rolls onto the street they need to slow down and be careful, as someone is most likely going to come after the ball. This requires an understanding of causation and the ability to reason. Judea Pearl’s work led to the development of causal Bayesian networks. The improvements that Pearl and other scholars have achieved in causal theory haven’t yet made many inroads in deep learning, which identifies correlations without too much worry about causation (Bergstein, 2020). Causal AI can move beyond correlation to highlight the precise relationships between causes and effects. It identifies the underlying web of causes of a behaviour or event and furnishes critical insights that predictive models fail to provide (Sgaier et al., 2020). Others, including myself, take the view that not all of ML is part of AI (Raschka, 2020a; Perez-Breva, 2018): only an 'intelligent' subset of ML is part of AI, as shown below (Raschka, 2020b). As per Raschka (2020a), while machine learning can be used to develop AI, not all machine learning applications can be considered AI. For instance, if someone implements a decision tree for deciding whether someone deserves a loan based on age, income, and credit score, we usually do not think of it as an artificially intelligent agent making that decision (as opposed to some “simple rules”). I updated the Wikipedia entry on Machine Learning (section "Relationship to AI") to reflect this view, but the change was reverted.
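The ball-and-child reasoning can be made concrete with a tiny causal Bayesian network. The sketch below is a minimal illustration under stated assumptions, not Pearl's full causal calculus: it assumes a hypothetical graph in which a child playing near the street causes both the ball rolling onto the road and the child stepping onto it, all probabilities are made-up numbers, and it only uses ordinary conditioning on an observation (no interventions).

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only).
# Assumed causal graph: Child -> Ball, Child -> Road
P_CHILD = 0.1                       # P(child playing near the street)
P_BALL = {True: 0.3, False: 0.01}   # P(ball rolls onto street | child nearby?)
P_ROAD = {True: 0.5, False: 0.001}  # P(child steps onto road | child nearby?)


def joint(child: bool, ball: bool, road: bool) -> float:
    """Joint probability, factorised along the assumed causal graph."""
    p_c = P_CHILD if child else 1 - P_CHILD
    p_b = P_BALL[child] if ball else 1 - P_BALL[child]
    p_r = P_ROAD[child] if road else 1 - P_ROAD[child]
    return p_c * p_b * p_r


def prob_road(ball_seen=None) -> float:
    """P(child on road), optionally conditioned on whether a ball was observed."""
    num = den = 0.0
    for child, ball, road in product([True, False], repeat=3):
        if ball_seen is not None and ball != ball_seen:
            continue  # discard worlds inconsistent with the observation
        p = joint(child, ball, road)
        den += p
        if road:
            num += p
    return num / den


print(f"P(child on road)          = {prob_road():.4f}")
print(f"P(child on road | ball)   = {prob_road(True):.4f}")
```

With these assumed numbers, seeing the ball raises the estimated probability of a child on the road from about 0.05 to about 0.38, which is the kind of inference the human driver makes and a detector that simply classifies the ball as harmless does not.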

Approaches to AI that are not based on machine learning include symbolic representations or logic rules, which can be written or programmed by observing a human expert performing a particular task. Systems developed by observing human experts are called expert systems. The symbolic approach to AI is now sometimes called “Good Old-Fashioned Artificial Intelligence” (Haugeland, 1989). From a programming perspective, one can think of such expert systems as complex computer programs with many (deeply nested) conditional statements (if/else statements). The most promising AI work is inspired by the human brain and is called neuroscience-inspired AI. Only a few start-ups are working in this field. In my view, if more resources were spent in this area, (strong) AI would be a reality within 5-10 years. In “The Book of Why: The New Science of Cause and Effect” (Pearl, 2019), Judea Pearl argues that artificial intelligence has been handicapped by an incomplete understanding of what intelligence really is (Pearl, 2018). This applies in particular to the widely accepted newer definition of AI, described next.
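To illustrate the "deeply nested if/else" view of an expert system, here is a minimal sketch. The rules are hypothetical, written as if elicited from a human driving instructor for the ball-on-the-road scenario described earlier; they are not taken from any real system, and the nesting is kept explicit (rather than using `elif`) to mirror the description.

```python
def driving_advice(speed_kmh: float, ball_on_road: bool, pedestrian_visible: bool) -> str:
    """Hypothetical hand-written rules in the style of a symbolic expert system."""
    if pedestrian_visible:
        return "brake"
    else:
        if ball_on_road:
            # Expert knowledge encoded by hand: a ball is often followed by a child.
            if speed_kmh > 30:
                return "slow down to 30 km/h"
            else:
                return "proceed with caution"
        else:
            return "continue"


print(driving_advice(50, ball_on_road=True, pedestrian_visible=False))
print(driving_advice(20, ball_on_road=False, pedestrian_visible=True))
```

Every piece of "knowledge" lives in an explicit, readable rule, which makes each decision easy to explain; the flip side is that any situation the rule author did not anticipate simply falls through to a default branch.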

Acting Rationally: The Rational Agent Definition

Building algorithms that satisfy the requirements of the cognitive definition proved complex and difficult. So, in order to revive work on AI and obtain funding after a long AI winter, some clever so-called 'AI experts' came up with a new definition, which is now used in most textbooks (Russell and Norvig, 1995; Nilsson, 1994; Poole et al., 1998): AI is the study of "intelligent agents", meaning any device that perceives its environment and takes actions that maximise its chance of successfully achieving its goals. This deceptive definition is very similar to the definitions of reinforcement learning (RL): learning by interacting with an environment to maximise a reward, or an agent taking actions in an environment in order to maximise the notion of cumulative reward (GeeksforGeeks, 2020). RL is part of machine learning (ML). Machine learning is a field concerned with the development of algorithms that learn models, representations, and rules automatically from data (Raschka, 2020). The rational agent definition of AI implies very limited or no real ‘intelligence’, as the ‘intelligent agent’ does not need to be able to adjust to a changing environment, nor does it require reasoning or generalisation. This definition of (weak) AI, which is now widely accepted, means that all of machine learning is a subset of AI, as shown in the Figure below. In reality, it is just the illusion of 'intelligence', produced using maths and statistics with lots of data (big data) and more processing power, but with no understanding of the outputs. What has been achieved with big-data ML is very impressive, and its applications will be very useful for humanity. Big-data ML is a positive step forward, but it is most likely not going to achieve (strong) AI.
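The reward-maximising loop in the RL definitions above can be sketched with tabular Q-learning, a standard RL algorithm, on a hypothetical toy corridor: the agent starts at the left end and receives a reward of 1 for reaching the right end. The observation is the whole "board" (a tuple marking the agent's cell), and the hyperparameters are arbitrary assumptions. The point is only to show that what the agent learns is tied to the exact environment it was trained in.

```python
import random


def encode(length: int, pos: int) -> tuple:
    """Observation: the whole 'board', with a 1 marking the agent's cell."""
    return tuple(1 if i == pos else 0 for i in range(length))


def train_q_table(length: int, episodes: int = 500, alpha: float = 0.5,
                  gamma: float = 0.9, epsilon: float = 0.2, seed: int = 0) -> dict:
    """Tabular Q-learning on a 1-D corridor; reward 1 for reaching the right-hand end."""
    rng = random.Random(seed)
    actions = (+1, -1)  # move right, move left (ties favour moving right)
    goal = length - 1
    q = {}  # observation -> {action: estimated cumulative discounted reward}
    for _ in range(episodes):
        pos = 0
        while pos != goal:
            s = encode(length, pos)
            q.setdefault(s, {a: 0.0 for a in actions})
            if rng.random() < epsilon:
                a = rng.choice(actions)                      # explore
            else:
                a = max(actions, key=lambda act: q[s][act])  # exploit
            nxt = min(max(pos + a, 0), goal)  # walls at both ends
            reward = 1.0 if nxt == goal else 0.0
            future = 0.0 if nxt == goal else max(q.get(encode(length, nxt), {0: 0.0}).values())
            q[s][a] += alpha * (reward + gamma * future - q[s][a])
            pos = nxt
    return q


q5 = train_q_table(length=5)
greedy = [max(q5[encode(5, p)], key=q5[encode(5, p)].get) for p in range(4)]
print("greedy actions on the 5-cell corridor:", greedy)

# The same agent dropped into a 7-cell corridor: does it recognise any state?
seen = sum(encode(7, p) in q5 for p in range(7))
print("7-cell states found in the learned table:", seen)
```

Within its own corridor the agent learns to always move right (`[1, 1, 1, 1]`), yet not a single observation from the 7-cell corridor appears in its table, so it would have to be trained again from scratch. A deep network would at least produce some output for an unseen observation, but this toy example shows in its starkest form the transfer problem discussed in the sections on weak AI and Go below.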

Risks of AI

Recently this topic has received a lot of attention due to an open letter, signed by many so-called AI experts, calling for a pause in the development of 'AI' more powerful than GPT-4.

Risks of big-data ML (aka weak AI)

The first documented case of humans being manipulated by big-data ML was the Facebook-Cambridge Analytica scandal. Data was harvested from Facebook users without their knowledge, and custom posts were presented to them based on their profiles. The posts were then tweaked according to how users interacted with them. The manipulation relied on the neuroscience and psychology finding that all humans have some biases when making decisions and taking actions. If a bias is very strong, these humans can be 'hacked' by reinforcing their fears and biases. Yuval Harari, the author of Sapiens (a great summary of the history of humankind), argues that AI will be used to hack humans if there is no AI regulation. In reality this 'hacking' is already happening, but it can get much worse.

Gary Marcus lists the following risks in his article on AI and AGI risks:

- Phishing and online fraud can be created faster, much more authentically, and at significantly increased scale.

- The ability of LLMs to detect and reproduce language patterns does not only facilitate phishing and online fraud, but can also generally be used to impersonate the style of speech of specific individuals or groups. This capability can be abused at scale to mislead potential victims into placing their trust in the hands of criminal actors.

- In addition to the criminal activities outlined above, the capabilities of ChatGPT lend themselves to a number of potential abuse cases in the area of terrorism, propaganda, and disinformation. As such, the model can be used to gather more information that may facilitate terrorist activities, such as terrorism financing or anonymous file sharing.

Geoffrey Hinton left Google to warn about the dangers of AI misinformation. He received a lot of attention as the supposed 'godfather of AI'. In reality, he is the godfather of deep neural networks and of biologically implausible backpropagation. Time will tell whether his work contributed to or hindered research towards real AI.

Risks of real AI (aka strong AI and AGI)

There is a fear that strong AI may become super-intelligent and put an end to human hegemony, bringing balance to our planet. Others think that AGI could pose an existential risk to humans. For both short- and long-term risks, some kind of regulatory framework is required. Europe, the UK and the US are already working on AI frameworks.

Narrow and General AI

AI can be further categorized into Artificial General Intelligence (AGI) and Narrow AI. AGI is focused on the development of multi-purpose AI mimicking human intelligence across tasks. AGI implies general and strong AI. Narrow AI focuses on solving a single, specific task (chess/Go playing, image interpretation, natural language processing, label classification, car driving, playing a computer game, and so forth). There has been great progress in the last few years in optimising AI algorithms for specific applications. However, there has been very little progress towards AI algorithms with any broad cognitive ability, including generalisation. DeepMind seems to have developed a weak and general AI called Gato (Reed et al, 2022), which is also mentioned on the AGI page of Wikipedia, although my view is that it will only achieve real intelligence (strong AI) if it can generalise and understand information. Although Gato is a general agent for many tasks, it cannot really generalise information as some have claimed. Achieving weak and general AI is nevertheless tremendous progress and will enable many new applications.

Strong and Weak AI

Weak AI is a made-up term to accommodate the masses who use the AI buzzword for big-data ML that in reality has no human-level intelligence. It corresponds to the rational agent definition. AI systems that learn by interacting with the environment, but whose learning is not transferable to even modestly different circumstances (e.g. to a board of a different size, or from one video game to another with the same logic but different characters and settings) without extensive retraining, are called weak AI. Such systems often work impressively well when applied to the exact environments on which they are trained, but we often can't count on them if the environment differs, sometimes even in small ways, from the one on which they were trained. Such systems have been shown to be powerful in the context of games, but have not yet proven adequate in the dynamic, open-ended flux of the real world (Marcus 2020). I would argue that none of these systems are on the path to human-level intelligence, and therefore there is no AI yet. The future of AI is extremely bright if we look at what has already been achieved with big-data ML (weak AI). Changing these data-hungry algorithms, which do not understand anything while learning, to an explainable model-based approach would open the door to many new breakthroughs and is the next step in the evolution towards real AI. Playing Atari with Deep Reinforcement Learning (Mnih et al, 2013) would be narrow and weak AI. Vicarious proposed a generative causal model that does better (Kansky et al, 2017). Sadly, Vicarious has now also been acquired by Alphabet, which had already bought DeepMind. The problem with Alphabet is that it stops long-term strategic research towards strong AI (wasting all the neuroscience resources) in favour of short-term tactical weak AI research that monetises low-hanging fruit, as weak AI also has many applications. DeepMind AlphaGo (Silver et al, 2016) would be a narrow and weak AI.

Limitations of big-data ML (aka weak AI)

Weak AI follows the rational agent definition, which implies that all ML algorithms are a subset of AI even if they cannot:

- Adapt to a changing environment and generalise

- Explain

- Understand Causation and Reason

Autonomous Driving

Autonomous driving without human intervention will never be possible in the real world with big-data ML aka weak AI. The reason is that weak AI cannot apply its training data to situations it was not trained on. Autonomous driving could, however, be possible in geo-fenced environments where few obstacles are present, such as on a campus or in a dedicated truck lane. The real world is dynamic and open-ended, and no amount of memorisation of training data will make it possible to react to unexpected driving conditions. Another issue is that the objects which the weak AI was trained on may appear in configurations that the ML algorithm does not recognise, as it lacks the ability to generalise. The best we will be able to achieve with weak AI is the equivalent of subconscious driving when there are no unexpected incidents or obstacles. As weak AI is just glorified statistics optimising some weights, nobody understands the internal representation of these black-box models. Some humans are very naive and believe articles and videos about fully autonomous cars or trucks. The reality is that the technology for fully autonomous driving (Level 5 autonomy) does not exist yet, so when you see a self-driving car or truck there is always a human in the loop to take over in unexpected conditions. Alternatively, it could be a test circuit in a geo-fenced area. Bill Gates wrote an optimistic article on the future of autonomous vehicles. Meanwhile, it is nearly unbelievable that the first hands-free assisted driving vehicle allowed on UK motorways, from Ford, is only Level 2, whereas Level 3 technology is known to be already available.
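One simple way to implement "a human in the loop to take over" is a confidence check. The sketch below is a minimal, hypothetical version: a perception model's raw scores (logits) are turned into probabilities with a softmax, and control is handed to the human driver whenever the top probability falls below an assumed threshold of 0.9. The labels, scores and threshold are illustrative assumptions, not taken from any real vehicle.

```python
import math

LABELS = ["car", "pedestrian", "cyclist"]  # hypothetical object classes
THRESHOLD = 0.9                            # assumed minimum confidence


def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(logits, labels, threshold=THRESHOLD):
    """Return (label, confidence), or hand over to the human driver if unsure."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "DEFER_TO_HUMAN", probs[best]
    return labels[best], probs[best]


print(decide([4.0, -1.0, -1.0], LABELS))  # confident: the model's own label
print(decide([0.3, 0.1, 0.0], LABELS))    # uncertain: control goes to the human
```

A known weakness of this approach, which supports the argument above, is that neural networks can assign high softmax confidence to inputs unlike anything they were trained on, so a low-confidence check does not reliably catch the unexpected conditions in which the human most needs to take over.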
Let’s look at some news articles that confirm the above-mentioned limitations of weak AI:

- Even After $100 Billion, Self-Driving Cars Are Going Nowhere - Bloomberg

- Amazon Abandons Autonomous Home-Delivery Robot Scout in Latest Cut - Bloomberg

- Ford, VW-backed Argo AI is shutting down - TechCrunch

- Autonomous vehicles from Waymo and Cruise are causing all kinds of trouble in San Francisco

- California law bans Tesla from advertising its electric cars as ‘full self-driving’ - The Independent

- Tesla calls self-driving technology 'failure,' not fraud - Los Angeles Times

- Tesla Autopilot Crashes: With at Least a Dozen Dead, “Who’s at Fault, Man or Machine?”

- Weird Incidents Reveal L5 Challenges - semiengineering.com

- How self-driving cars got stuck in the slow lane - Guardian

- Apple Scales Back Self-Driving Car and Delays Debut Until 2026 (and even then it will not be self-driving but assisted driving)

Autonomous Military Applications

The largest risk to humanity from AI is here now, as we have only dumb and weak AI, which will do exactly what it is told without any common sense, without understanding cause and effect, and without the ability to explain why it took certain actions to achieve its objective. The military or police may not understand how limited the capabilities of the autonomous military systems sold to them as 'intelligent' really are. Imagine technology that is not mature enough to drive a car making life-or-death decisions. Only humans with very limited intelligence would put weak AI in charge of making decisions to kill without human intervention.

Strengths of Weak AI

The weak and narrow AI paradox: AI algorithms have excelled at solving specific tasks without displaying any intelligence. Big-data ML is great in fixed, unchanging environments, such as computer games, diagnosing health conditions and large language models (LLMs). ML has a feature that humans do not have (the ability to process large amounts of data using maths and statistics), and this gives these algorithms the illusion of intelligence, although the algorithms have no understanding of what they are doing. There are deceptive news articles like this one: ‘The Game is Over’: Google’s DeepMind says it is on verge of achieving human-level AI. Here a so-called AI expert repeats what many other so-called AI experts believe: that scaling up deep learning will result in human-level intelligence. This kind of deception may only achieve more funding for DeepMind, which will soon realise that scaling deep learning will never result in human-level intelligence. In fact, DeepMind started with neuroscience-inspired work towards real intelligence, but after being acquired by Google (now Alphabet) it changed to carrying out tactical ML-based work, churning out important weak AI deliverables and misinformation. Microsoft Research joined the misinformation bandwagon by claiming that GPT-4 has sparks of AGI.

Games

There is extensive use of ML in computer games. DeepMind's AlphaGo was the first computer program to defeat a professional human Go player and the first to defeat a Go world champion. If the game conditions are predictable and unchanging, weak AI with lots of data will achieve super-human performance. The data does not need to be pre-existing training data: the algorithm can generate it by playing the game. The algorithms are able to learn to play a game to maximise the reward without understanding anything about the game. Does the DeepMind software understand the game of Go (Lyre, 2019)? The answer is no. If we were to change the size of the game board, the ML algorithms would have to re-learn the game-related probabilities from scratch.

Medical Applications

Big-data ML aka weak AI will revolutionise healthcare applications. There are still many applications of this technology that have not yet resulted in a commercial product. Robots performing difficult operations with a human doctor in the loop will soon no longer be science fiction. Whenever the weak AI detects an abnormality for which it was not trained, it hands control back to the human doctor, who may ask the robot to abort the operation.

The Role of Artificial Intelligence in Early Cancer Diagnosis

Bioelectric Nose

Imagine that within the next 5-10 years there will be electronic noses that can detect health problems from your breath, including whether you have COVID. The issue is that scientists have not been able to replicate the organic sensors in the nose, which are the result of millions of years of evolution. Here are articles that summarise the latest developments in this field:

When will AI master smell?

Market Perspectives and Future Fields of Application of Odor Detection Biosensors— A Systematic Analysis

Applications and Advances in Bioelectronic Noses for Odour Sensing

Some companies that already have products:

Owlstone Medical Breath Biopsy

Aryballe Neose Advance

Alpha-Mos Heracles NEO

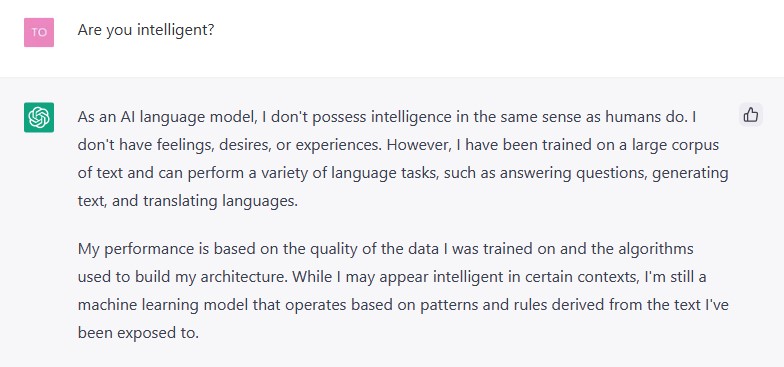

Large Language Models (LLMs)

There has been a lot of progress with large language model (LLM) applications such as chatbots, and once again there was evidence of how naive some humans are, as they quickly believed that these chatbots are intelligent or even sentient. A paper called Large Language Models and the Reverse Turing Test (Sejnowski, 2022) argues that by observing how humans interact with LLMs, we can determine quite a bit about those humans. There is also an interesting point: many humans do not think animals are sentient or 'intelligent' because they cannot communicate with us, yet a chatbot with no 'intelligence', using just statistics and vectors, appears 'intelligent' to them. The media then jump on nonsense statements that AI has become sentient.

First, there was a lot of publicity for Language Model for Dialogue Applications (LaMDA) from Google.

Then came ChatGPT from OpenAI, initially based on GPT-3.5; GPT-4 has since been released. It was claimed that ChatGPT is a threat to Google, but is it?

So, although sometimes brilliant and able to write great essays and get Tinder dates, these algorithms have no understanding of any of the concepts they are writing about, and no feelings. Therefore, calling these developments 'intelligent' or 'sentient' just shows that some humans have very limited intelligence and understanding of the technology used.

My comments dismissing as nonsense the statements that ChatGPT (GPT-3.5 and GPT-4) has advanced reasoning abilities have been deleted in Facebook groups. There is a serious effort to misinform the public, giving the impression that these systems have nearly achieved human-level intelligence and creating fear that these algorithms will cause mass unemployment, whereas they create new opportunities for humans as enablers.

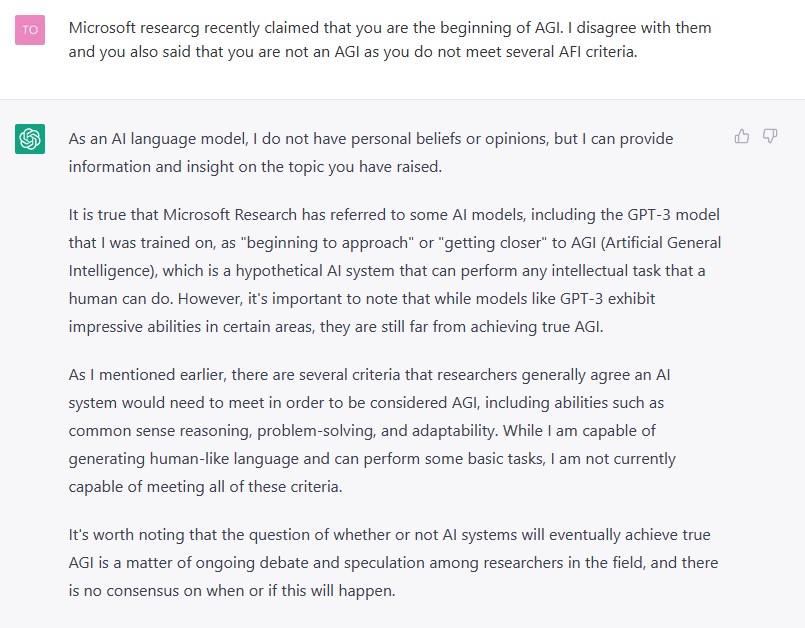

Scaling these LLMs will most likely never result in intelligence or sentience, as language is more than just a combination of words according to some vectors or weights. This is also the view of Noam Chomsky and Gary Marcus. There is a nice article by Ben Goertzel that sums up my views, and here is an article on 3 things that LLMs are still missing. GPT-4 imitates a human well, although it hallucinates and makes mistakes. It is highly recommended to fact-check all outputs of GPT-4. Microsoft Research published a paper claiming that GPT-4 had sparks of AGI. There was general agreement that this was nonsense, including from GPT-4 itself (screenshots below), a post by Bill Gates and a post by Gary Marcus.
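To make "a combination of words according to some vectors or weights" concrete, here is a deliberately tiny statistical language model: a bigram counter that always emits the word that most often followed the previous one. This is a simplifying stand-in, not how GPT-4 works: real LLMs are transformer neural networks over learned vector representations of words with vastly more parameters. What the toy shares with them is next-word prediction from statistics of the training text.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def generate(follows: dict, start: str, length: int = 6) -> str:
    """Greedy generation: repeatedly append the most frequent next word."""
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # no word ever followed this one in the corpus
            break
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "the ball rolls into the street and the child runs after the ball"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

On this toy corpus it produces "the ball rolls into the ball": locally plausible word pairs with no notion of balls, streets or children behind them.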

A few papers that represent my views:

On the Dangers of Stochastic Parrots

Against AI Understanding and Sentience: Large Language Models, Meaning, and the Patterns of Human Language Use

"The basic architecture and algorithmics underlying ChatGPT and all other modern deep-NN systems is totally incapable of general intelligence at the human level or beyond, by its basic nature. Such neural networks could form part of an AGI, sure – but not the main cognitive part." - Ben Goertzel

What is AI chatbot phenomenon ChatGPT and could it replace humans?

ChatGPT Is a Stunning AI, but Human Jobs Are Safe (for Now)

An AI that can write is feeding delusions about how smart artificial intelligence really is

ChatGPT is not all you need. A State of the Art Review of large Generative AI models, Jan 2023

Art

The applications of big-data ML aka weak AI are endless. This became obvious when ML algorithms were used to create art. DALL-E and then DALL-E 2 by OpenAI were able to create impressive pieces of art based on text input. The original DALL-E used a version of GPT-3, mentioned in the previous section, modified to generate images. Meanwhile, Silicon Valley awarded OpenAI $1 billion in funding, and Microsoft invested (and had invested before) a couple of billion dollars. A competitor, stability.ai, went viral when it provided an unrestricted API to app providers. The dataset used for Stable Diffusion is based on a 5-billion-image dataset scraped from the internet by laion.ai. This dataset contains many pictures from artists who were not compensated, as well as many sexualised pictures. In a public announcement, stability.ai stated that Stable Diffusion “may reproduce some societal biases and produce unsafe content.” By contrast, there is published information on how DALL-E 2 training data is filtered. So any app using Stable Diffusion is indirectly using stolen art. This would be easy to fix by purchasing or licensing a large set of pictures and screening out explicit and biased pictures. It would take some effort, but it would also provide the original artists of the ingested pictures with some income. As expected, the first lawsuits have been filed by artists and by Getty Images over the scraping of their pictures. Although the original picture generation was text-based, one company had the idea of providing app users with an interface to upload 5-10 selfies and generate avatars using Stable Diffusion. This idea was then copied by many other apps. There have been some privacy concerns about the storage of the uploaded selfies. The issue, as explained above, is that these apps rely on the Stable Diffusion dataset to generate the avatars without any royalties paid to the artists whose pictures were ingested into the massive 5-billion-picture dataset.
An AI-generated picture won an art prize, and artists were not happy. Photo service Getty Images is banning AI-generated images due to copyright concerns about the training data.

A.I.-Generated Art Is Already Transforming Creative Work

Picture Limitless Creativity at Your Fingertips

For a balanced view, although I do not agree with it, as in my opinion AI has no real creativity:

Your Creativity Won’t Save Your Job From AI

AI Is Coming For Commercial Art Jobs. Can It Be Stopped?

Path to Strong AI

One path that is surely going to result in strong AI is to use the human brain as inspiration. Humans create a model of the world and base their predictions and actions on this model. It is important to note that the model is not fixed: humans continuously learn and update it. Most humans have an inaccurate model of the world, and this results in wrong predictions and actions, as can be seen from the state of our planet. The majority of humans also have a very strong bias towards their incorrect model of the world, so despite scientific evidence they do not update their world model with anything that contradicts their bias. However, they are open to pseudo-science and deceptive social media posts that reinforce their incorrect model. Examples are humans who believe the moon landings never happened, that 9/11 was an inside job, or that being gay is not natural because a religious book says so. Hypothetically, if an AI were indoctrinated with a lot of misinformation, it could also create a wrong model and take actions that could result in many human deaths. This risk exists as the militaries of super-powers are secretly working on AI to use it for global dominance. Technologists called for a pause of at least six months in the training of AI systems more powerful than GPT-4, citing "profound risks to society and humanity". But should we really fear the rise of AGI? I think this is the wrong question, as LLMs will most likely not lead to AGI. The real threat is LLMs being used for malicious purposes by humans. Gary Marcus wrote a post explaining that he is not afraid of the technology but of the humans using it. As there is already a strong foundation in deep learning and reinforcement learning, the easiest (but maybe not the best) approach is to use these existing building blocks to reach strong AI. Strong AI experts think this is the wrong approach.
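The contrast between updating a world model and clinging to a bias can be illustrated with Bayesian updating, a standard way of revising a belief in light of evidence. It is used here purely as an illustrative assumption, not as a claim about how brains work. A Beta distribution represents the belief that some claim is true, and each observation adds to one of its two counts; the "open-minded" and "biased" priors below are hypothetical numbers.

```python
def update_beta(alpha: float, beta: float, observations: list) -> tuple:
    """Conjugate Beta-Bernoulli update: each True adds to alpha, each False to beta."""
    for supports_claim in observations:
        if supports_claim:
            alpha += 1
        else:
            beta += 1
    return alpha, beta


def belief(alpha: float, beta: float) -> float:
    """Posterior mean: estimated probability that the claim is true."""
    return alpha / (alpha + beta)


evidence = [False] * 20  # twenty observations contradicting the claim

open_minded = update_beta(1, 1, evidence)  # weak prior: 50/50
biased = update_beta(1000, 1, evidence)    # very strong prior in favour of the claim

print(f"open-minded belief: {belief(1, 1):.2f} -> {belief(*open_minded):.2f}")
print(f"biased belief:      {belief(1000, 1):.2f} -> {belief(*biased):.2f}")
```

After the same twenty contradicting observations, the open-minded belief falls from 0.50 to about 0.05, while the biased one barely moves (from about 1.00 to about 0.98).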
Yoshua Bengio, co-author of the widely used Deep Learning book, has an interesting presentation on how to progress from deep learning to causality and generalisation. He also co-authored two interesting papers on this topic (Javed et al, 2020 and Schölkopf et al, 2021). Yann LeCun has set out his own proposal in A Path Towards Autonomous Machine Intelligence. DeepMind has an interesting paper on using a world model to master diverse domains (Hafner et al, 2023), in which RL learns a model of the world. The AGI Society and OpenCog, founded by Ben Goertzel, are some of the open-source initiatives towards strong AI. Strong AI or AGI will not be achieved by just adding causal reasoning to weak AI (Bishop, 2022). My view is that one path to strong AI is the explainable, world-model-based approach, which will make it possible for the agent to adapt to a changing environment and generalise. Numenta founder Jeff Hawkins has written two great books on neuroscience-inspired AI, and he is also one of the main supporters of the model-based AI paradigm. Karl Friston's Designing Ecosystems of Intelligence from First Principles is another interesting read; it would be great to apply Friston's ideas to Jeff Hawkins' Thousand Brains model. There is supposed to be a Journal of Artificial General Intelligence, but I could not download any of its open-access issues. IEEE Transactions on Artificial Intelligence has interesting papers, including a recent one on advances in trustworthy explainable artificial intelligence. Distributed intelligence is an idea based on the fact that information in the brain is represented by the activity of numerous neurons, sometimes with multiple neurons even competing, as there is built-in redundancy. A recent article called A New Approach to Computation Reimagines Artificial Intelligence has some interesting ideas that are being worked on.